Conversational Image Generation Tool for Open WebUI

Just made an image generation tool for Open WebUI that supports conversational image generation like ChatGPT.

Check it out: https://openwebui.com/t/olof/flux_ultra_replicate_image

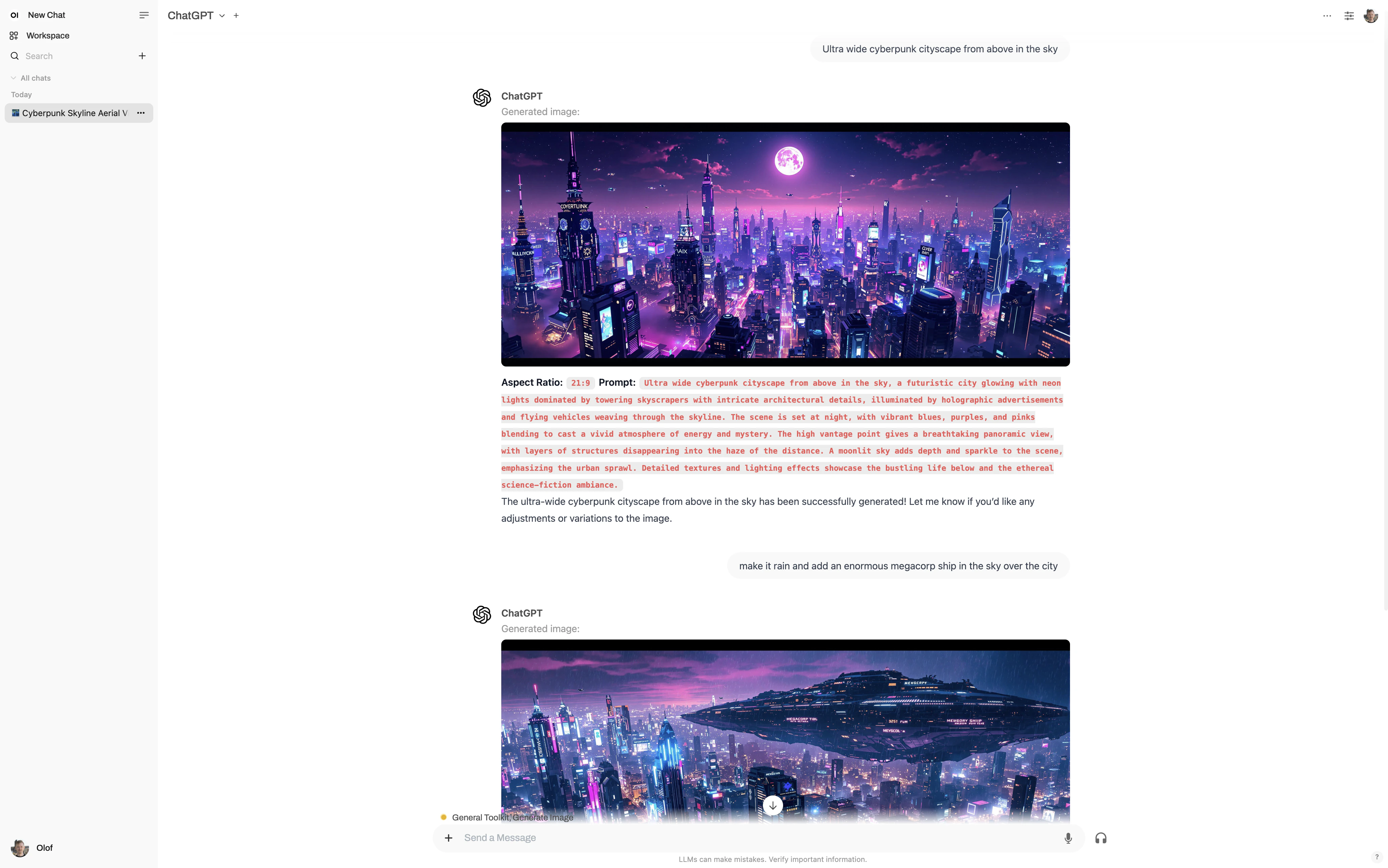

📷 Usage Screenshot

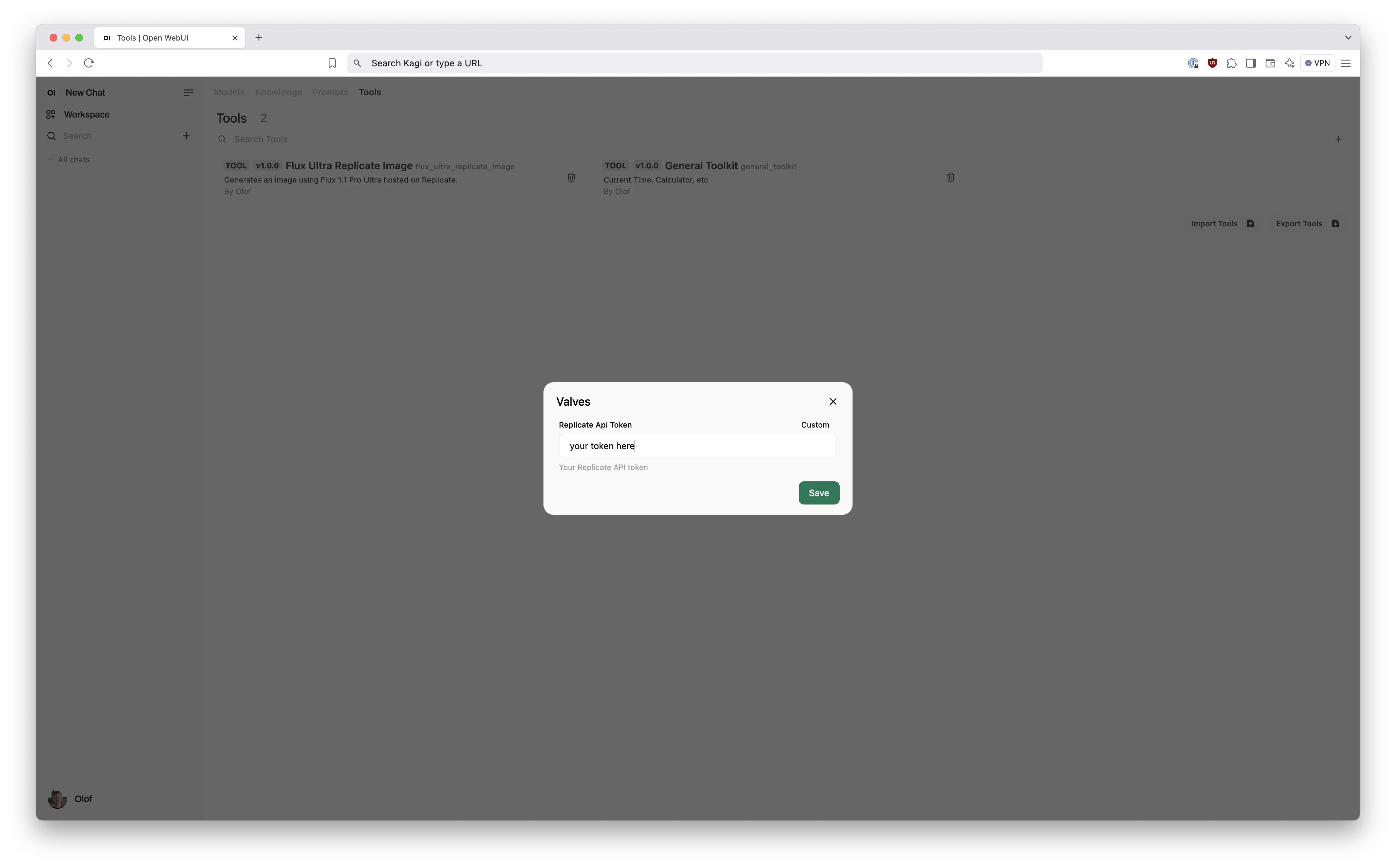

📷 Setup Screenshot

💡 The Core Idea

I really like the ChatGPT approach to image generation where you "talk your way" to the final image by asking for changes. It is way easier than doing the actual image prompt engineering yourself IMO. You converse with an LLM instead, which does the image prompt engineering for you.

That conversational user experience is not supported by default in Open WebUI. Nor was it supported by the first community solution I tried out https://openwebui.com/f/balaxxe/flux_1_1_pro_ultra.

Turns out that for conversational mode to work the image generator must be a "tool" and not a "function". There was a few tools out there already, but I made a new one with the following key aspects in mind:

- Leverage Replicate synchronous API properly to make just a single request.

- Prompt engineer around the temporal confusion that happens as we speak on behalf of the LLM to show the image.

- Minimal Valves - Just a single one for the REPLICATE_API_TOKEN.

- The image aspect ratio is set conversationally.

- Always return JPG. Because PNG is too large, and WEBP isn't compatible with Google Docs and others.

Simple and a bit opinionated.

Convention over configuration.

💕 Thank You

Thank you to the community for making these inspiration sources:

- https://openwebui.com/t/justinrahb/image_gen

- https://openwebui.com/f/balaxxe/flux_1_1_pro_ultra

- https://openwebui.com/t/heng/flux_pro_image_generation_with_the_bfl_api

- https://openwebui.com/t/hasanraiyan/image_generation_using_pollination_ai

- https://openwebui.com/t/haervwe/create_image_hf

📝 Full Code

"""

title: Flux Ultra Replicate Image

description: Generate images with Flux 1.1 Pro Ultra hosted on Replicate.

author: Olof Larsson

author_url: https://olof.tech/conversational-image-generation-tool-for-open-webui/

version: 1.1.1

license: MIT

"""

from typing import (

Literal,

)

import requests

import os

import json

from pydantic import BaseModel, Field

# Learn more here:

# https://olof.tech/conversational-image-generation-tool-for-open-webui/

# Thank you to the community for making these inspiration sources:

# - https://openwebui.com/t/justinrahb/image_gen

# - https://openwebui.com/f/balaxxe/flux_1_1_pro_ultra

# - https://openwebui.com/t/heng/flux_pro_image_generation_with_the_bfl_api

# - https://openwebui.com/t/hasanraiyan/image_generation_using_pollination_ai

# - https://openwebui.com/t/haervwe/create_image_hf

ImageAspectRatioType = Literal[

"21:9", "16:9", "3:2", "4:3", "5:4", "1:1", "4:5", "3:4", "2:3", "9:16", "9:21"

]

class Tools:

class Valves(BaseModel):

REPLICATE_API_TOKEN: str = Field(

default="", description="Your Replicate API token"

)

def __init__(self):

self.valves = self.Valves(

REPLICATE_API_TOKEN=os.getenv("REPLICATE_API_TOKEN", ""),

)

async def generate_image(

self,

image_prompt: str,

image_aspect_ratio: ImageAspectRatioType,

__event_emitter__=None,

) -> str:

"""

Generate an image given a prompt

Whenever a description of an image is given, use this tool to create the image from a prompt.

You do not need to ask for permission to generate, just do it!

DO NOT write out the prompt before OR after generating the images. The prompt should ONLY ever be written out ONCE, in the `"image_prompt"` field of the request.

The image_prompt sent to this tool must abide by the following policies:

1. The prompt should be in English. If the image description was not in English, then translate it.

2. Always mention the image type (photo, oil painting, watercolor painting, illustration, cartoon, drawing, vector, render, etc.) in the beginning of the prompt. Unless the description suggests otherwise.

3. The prompt must intricately describe every part of the image in concrete, objective detail. THINK about what the end goal of the description is, and extrapolate that to what would make satisfying images.

4. The prompt should be a paragraph of text that is extremely descriptive and detailed. It should be more than 3 sentences long.

5. If the user requested modifications to a previous image, the prompt should not simply be longer, but rather it should be refactored to integrate the suggestions.

The image_aspect_ratio should be selected by picking the most suitable one for the image.

- If the user suggests or implies an aspect ratio, then use that, or the closest valid one.

- "3:4" should be used for upper body shots and similar.

- "2:3" should be used for full body portraits and similar.

- "21:9" for panoramas or ultra wide images.

- "16:9" is otherwise the default recommended aspect ratio.

:param image_prompt: Text prompt for image generation.

:param image_aspect_ratio: Aspect ratio for the generated image.

"""

try:

# I don't know why, but emitting the event twice is required for it to appear in the UI.

for _ in range(2):

await __event_emitter__(

{

"type": "status",

"data": {"description": "Generating image ...", "done": False},

}

)

replicate_api_token = self.valves.REPLICATE_API_TOKEN

if not replicate_api_token:

raise ValueError("REPLICATE_API_TOKEN is not set")

image = generate_image_with_replicate_flux_pro_ultra(

replicate_api_token,

image_prompt,

image_aspect_ratio,

)

await __event_emitter__(

{

"type": "status",

"data": {"description": "Generated image:", "done": True},

}

)

await __event_emitter__(

{

"type": "message",

"data": {

"content": f" \n**Aspect Ratio:** `{image_aspect_ratio}` **Prompt:** `{image_prompt}` \n"

},

}

)

# This aims to work around an LLM "temporal confusion" problem.

# It gets confused when we invisibly speak on it's behalf.

return f"""

The image generation completed successfully!

Note that the generated image ALREADY HAS been automatically sent and displayed to the user by the tool.

You don't need to do anything to show the image to the user.

When you answer now - simply tell the user the image was successfully generated.

Answer with text only, NO IMAGES, NO ASPECT RATIO, NO IMAGE PROMPT.

Just tell the user that the image was successfully generated.

At the end of your answer you might ask the user if they want anything changed to the image.

Should they later come back and ask for changes - just generate a new image with potentially modified parameters based on their feedback.

Keep your answer short and concise.

"""

except Exception as e:

await __event_emitter__(

{

"type": "status",

"data": {"description": f"An error occurred: {e}", "done": True},

}

)

return f"Tell the user: {e}"

def generate_image_with_replicate_flux_pro_ultra(

replicate_api_token: str,

prompt: str,

aspect_ratio: ImageAspectRatioType,

) -> str:

url = "https://api.replicate.com/v1/models/black-forest-labs/flux-1.1-pro-ultra/predictions"

headers = {

"Authorization": f"Bearer {replicate_api_token}",

"Content-Type": "application/json",

"Prefer": "wait=60",

}

payload = {

"input": {

"raw": False,

"prompt": prompt,

"aspect_ratio": aspect_ratio,

"output_format": "jpg",

"safety_tolerance": 6,

}

}

response = requests.post(url, headers=headers, data=json.dumps(payload))

response.raise_for_status()

response_json = response.json()

remote_image_url = response_json.get("output", "")

return remote_image_url