Cheap ChatGPT-ish for your Org

Set up https://github.com/open-webui/open-webui for your organization, point it at a pay-per-use LLM API, and enjoy a ChatGPT Enterprise equivalent at about 1/5 the cost.

💸 They all want 30 bucks!

Have you noticed how enterprise AI solutions all seem to be priced at 30 bucks per user and month these days?

For example:

- ChatGPT: 30 USD monthly or 25 USD if annually (https://openai.com/chatgpt/pricing/)

- Sana: 35 EUR monthly or 30 EUR if annually (https://sana.ai/#pricing)

- Lindy: 30 USD monthly (https://www.lindy.ai/pricing)

- Dust: 29 EUR monthly (https://dust.tt/home/pricing)

- Gemini Workspace: 30 USD monthly if annually (https://workspace.google.com/solutions/ai/#plan)

At Platform24 we recently tried Gemini for a month, but it wasn't adopted much, so we canceled it. I think that was largely because it cost thirty bucks per employee per month, even for the employees who did not use it at all. A pay-per-use model would have been way more attractive!

🛣️ The road to glory

- 🤏 Pick an Open Source UI

- 🤏 Pick a pay-per-use API

- 💰 Communicate the Cost Savings

- ☸️ Hosting Setup

- ⚙️ Tweaking Config

- ❤️ Spread the Word

💡 I love these kinds of horizontal tooling initiatives. I'm usually happy if I can go outside of my own team and manage to do something that helps all of the engineering teams. This initiative even has the potential to be useful for everyone in the organization (not just an engineering devtool).

🤏 1. Pick an Open Source UI

Going for Open WebUI: https://github.com/open-webui/open-webui. That project seems to be on fire right now (in a good way)! 🌟 48K stars on GitHub and 💕 289 contributors. It was previously called "Ollama WebUI", but these days you can use it with any OpenAI-compatible API. It has Helm charts! 💪 https://github.com/open-webui/helm-charts

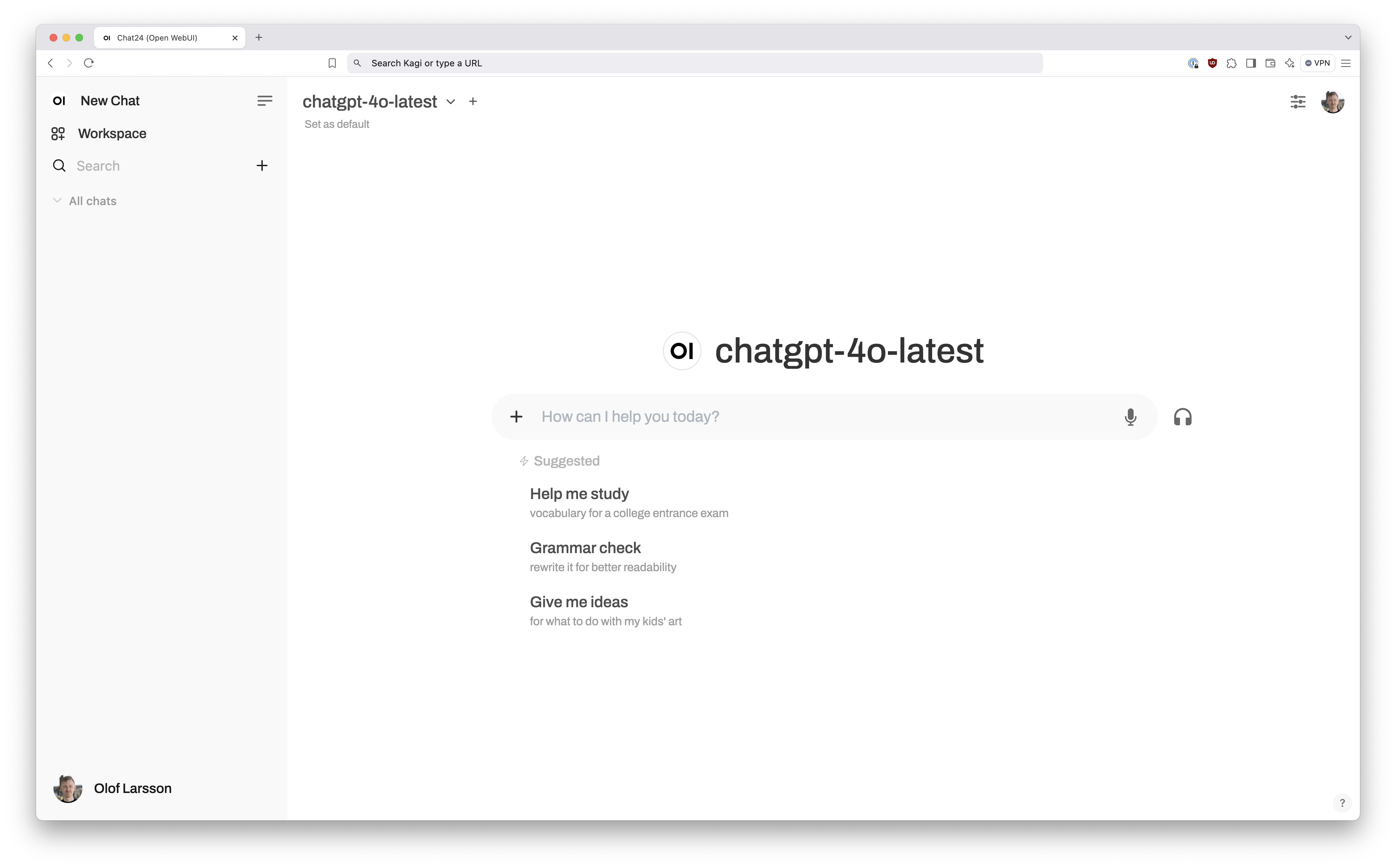

📷 What it looks like:

I took a look at some GitHub topics:

- https://github.com/topics/llm-webui

- https://github.com/topics/llm-ui

- https://github.com/topics/chatgpt

- https://github.com/topics/rag

- https://github.com/topics/ai

Based on that I tested:

- https://github.com/open-webui/open-webui

- https://github.com/Mintplex-Labs/anything-llm

- https://github.com/MoazIrfan/Any-LLM

- https://github.com/mrdjohnson/llm-x

The project https://github.com/open-webui/open-webui really stood out as a clear winner to me. It has the best-looking UI, the largest number of contributors, and seems to be a truly open source project without any "paid offering".

🤏 2. Pick a pay-per-use API

Going for OpenAI Platform: https://platform.openai.com/

Because:

- 👍 It is well supported by Open WebUI

- 👍 It has all models types: LLM, STT, TTS, and embeddings

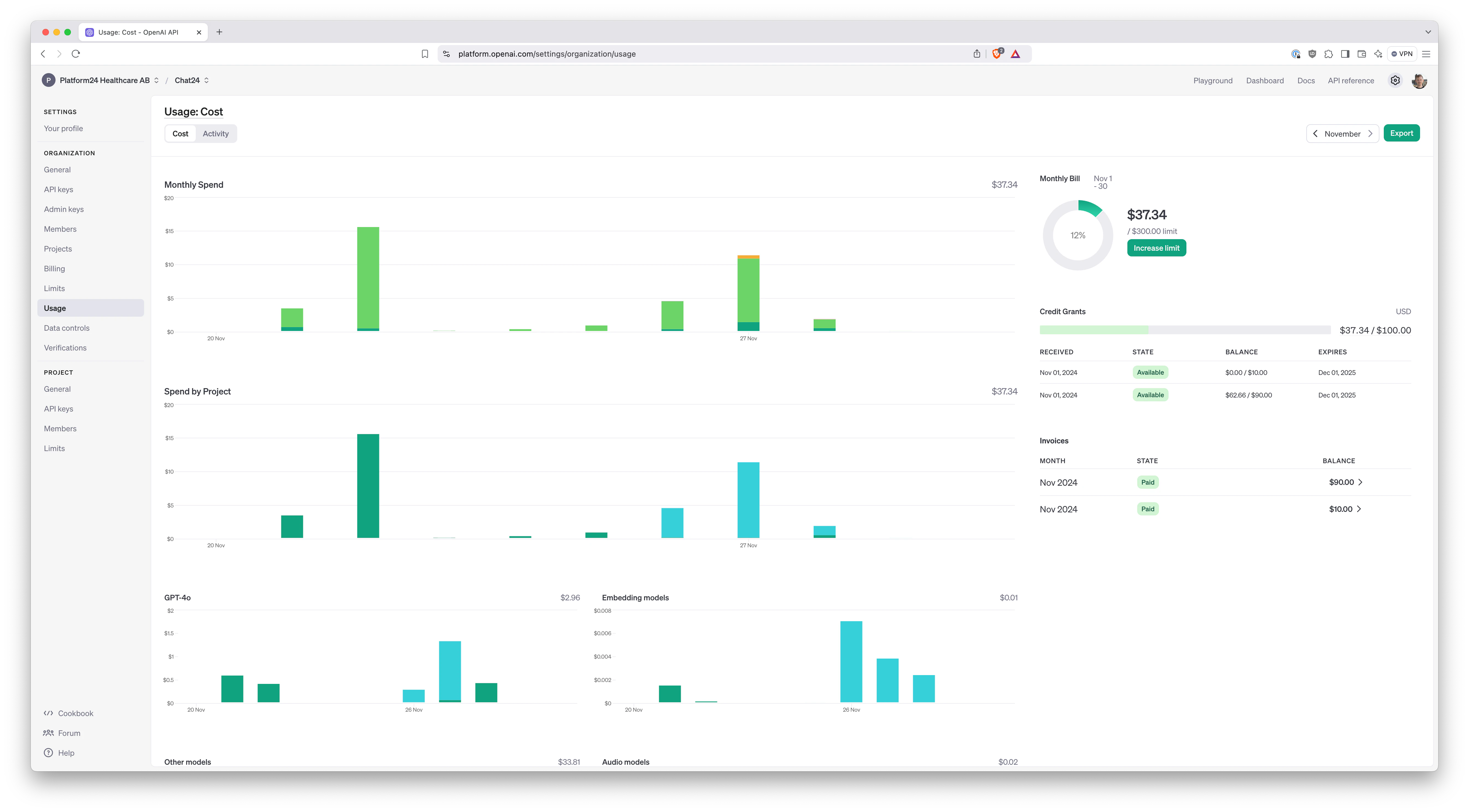

- 👍 Great admin experience with cost statistics and billing system

So by going for https://platform.openai.com/ we keep it simple!

📷 What it looks like:

That being said, many companies offer OpenAI compatible APIs. Depending on your needs, there are some great alternatives.

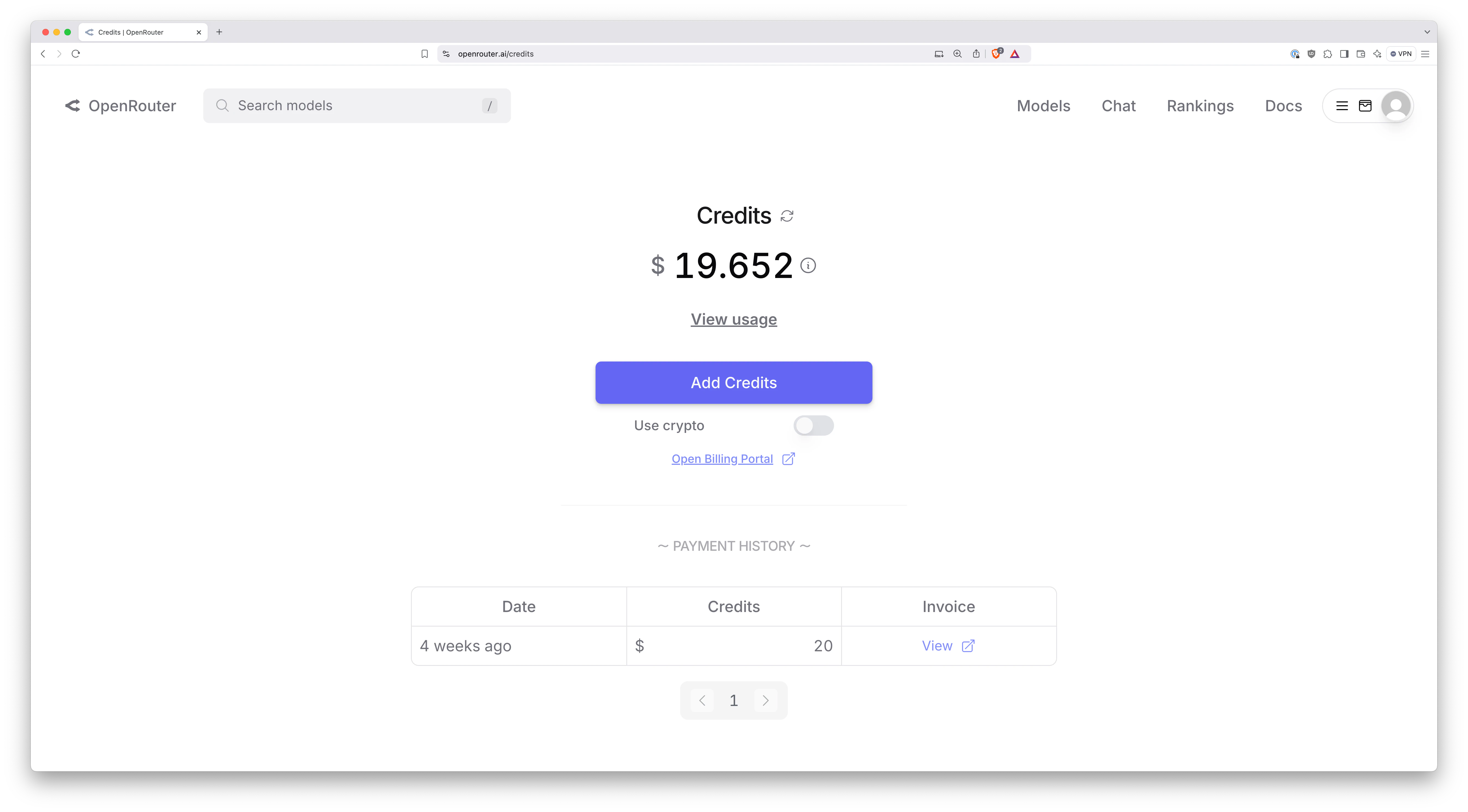

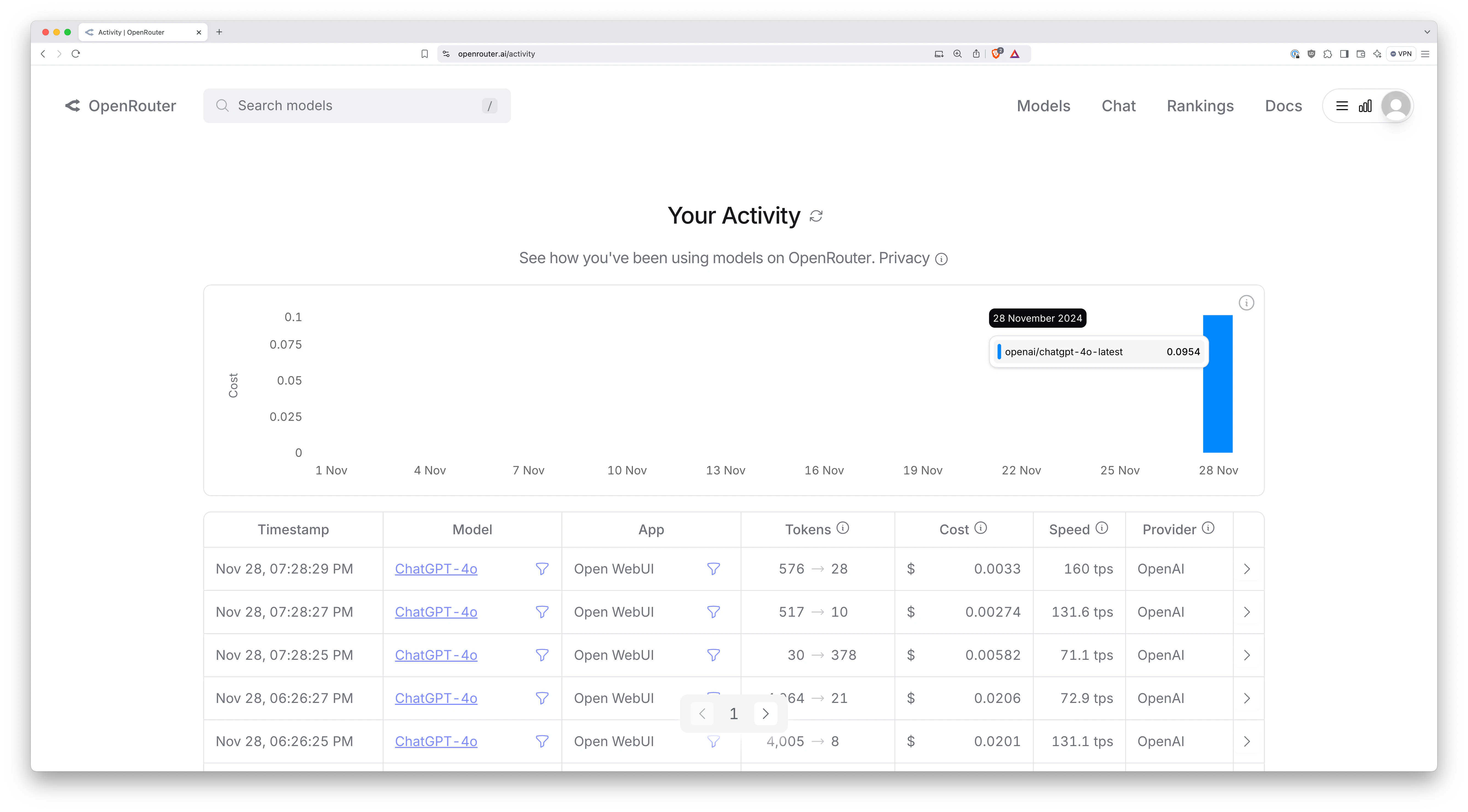
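To make "OpenAI compatible" a bit more concrete: such providers generally accept the same POST to `{base_url}/chat/completions` with a bearer token, so switching provider is mostly a matter of changing the base URL, the key, and the model name. Here is a minimal Python sketch that builds (but does not send) that request using only the standard library. The helper name `build_chat_request` and the placeholder keys are made up for illustration; the two base URLs and model names are the ones used in the config further down in this post.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a chat completions request for any OpenAI-compatible API (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same code, two providers. The keys are placeholders, nothing is sent.
openai_req = build_chat_request(
    "https://api.openai.com/v1", "sk-placeholder", "gpt-4o-mini", "Hello!"
)
openrouter_req = build_chat_request(
    "https://openrouter.ai/api/v1", "sk-or-placeholder", "openai/gpt-4o-mini", "Hello!"
)

print(openai_req.full_url)
print(openrouter_req.full_url)
```

To actually send one of these you would pass it to `urllib.request.urlopen(...)` with a real key. Open WebUI does the equivalent for you once the base URL and key are configured.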

Using https://openrouter.ai/ is an interesting meta-choice:

- 👍👍👍 Gives you access to ~278 models from different providers

- 👎 Doesn't offer STT or TTS models (but you could use https://platform.openai.com/ specifically for that)

- 👎 Only supports pre-paid credits for billing

- 👎 Creates a "Wild West" InfoSec situation where sensitive prompts may end up at many different companies

📷 What it looks like:

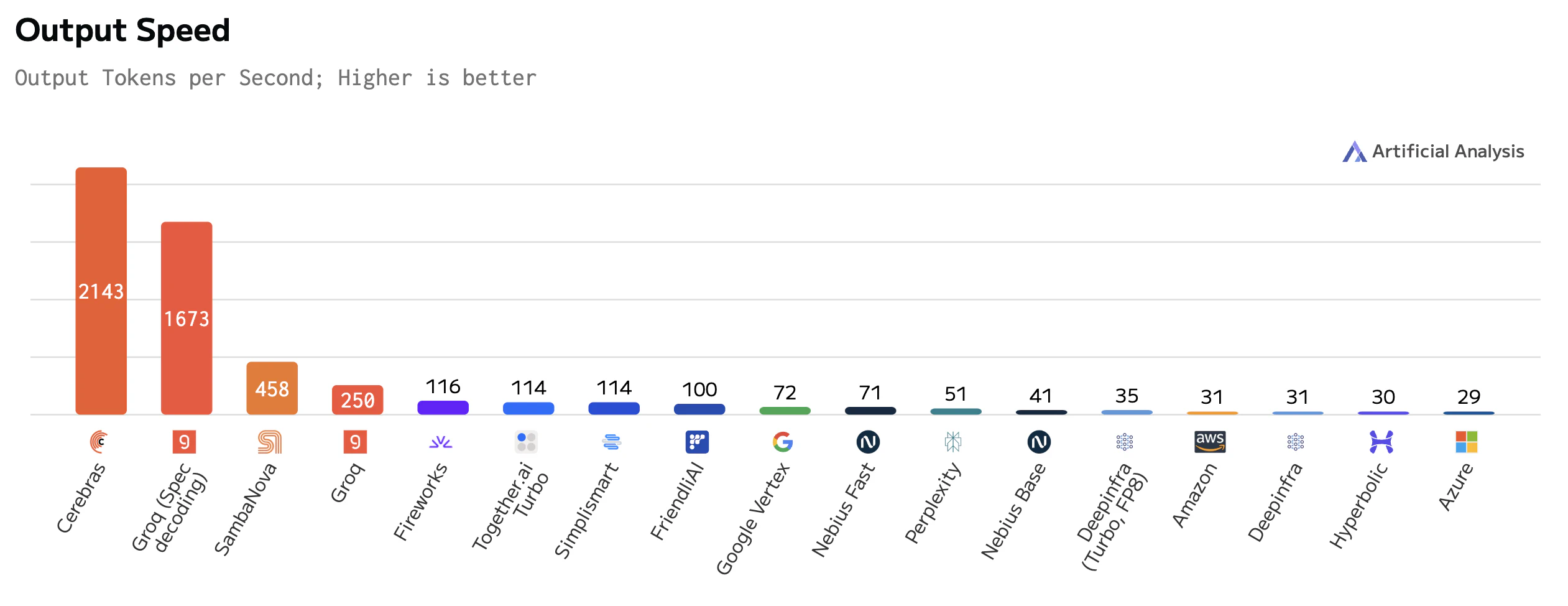

There are also those new hardware companies:

- Groq: https://console.groq.com/

- Cerebras: https://cloud.cerebras.ai/

- SambaNova: https://cloud.sambanova.ai/

🆓🤑 They offer a decent amount of inference for free!

Note that you might need a community function for SambaNova to work, such as https://openwebui.com/f/jshep/sambanova_cloud.

These companies offer Llama 3.1/3.2 inference at 🏎️ craaazy speeds: https://artificialanalysis.ai/models/llama-3-1-instruct-70b/providers

💰 3. Communicate the Cost Savings

I think a great way to communicate cost savings is by calculating and comparing the yearly costs of the alternatives.

First some facts:

- Employee Count: 100

- ChatGPT cost per user and month: 30 USD

- Smallest Hetzner VPS 1 Year: 48 USD (All you need for Open WebUI)

- Working days per month: 21 (https://www.onfolk.com/blog/how-many-working-days-are-in-a-month-on-average)

- Pay-per-use API costs per user and day: 0.2 USD (That's around 8 long GPT-4o questions)

Then yearly costs for each alternative:

- ChatGPT Enterprise: 36 000 USD (100 * 30 USD * 12)

- Open WebUI + OpenAI Platform: 5 088 USD (48 USD + 100 * 21 * 12 * 0.2 USD)

- Open WebUI + Groq/Cerebras/SambaNova: 48 USD (Yep, they offer free inference APIs!)

💸🧠 Save 30 912 USD by going for option 2 rather than option 1 (36 000 USD - 5 088 USD)!
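If you want to redo this calculation with your own headcount and usage, the arithmetic above fits in a few lines of Python. This is just a sketch using the numbers from this post; the function names are made up, and the 0.2 USD per user and day is the same rough estimate as above, not a measured figure.

```python
# Inputs taken from the facts listed above. Swap in your own numbers.
EMPLOYEES = 100
SEAT_PRICE_USD = 30            # ChatGPT cost per user and month
VPS_YEARLY_USD = 48            # smallest Hetzner VPS, one year
WORKING_DAYS_PER_MONTH = 21
API_USD_PER_USER_DAY = 0.2     # estimated pay-per-use API cost per user and day
MONTHS = 12


def per_seat_yearly(employees: int, seat_price: float) -> float:
    """Yearly cost of a flat per-seat subscription."""
    return employees * seat_price * MONTHS


def pay_per_use_yearly(employees: int, usd_per_user_day: float) -> float:
    """Yearly cost of one VPS plus metered API usage on working days."""
    api = employees * WORKING_DAYS_PER_MONTH * MONTHS * usd_per_user_day
    return VPS_YEARLY_USD + api


def break_even_usd_per_user_day(employees: int, seat_price: float) -> float:
    """Daily API spend per user at which both options cost the same."""
    user_days = employees * WORKING_DAYS_PER_MONTH * MONTHS
    return (per_seat_yearly(employees, seat_price) - VPS_YEARLY_USD) / user_days


chatgpt = per_seat_yearly(EMPLOYEES, SEAT_PRICE_USD)
openai_platform = pay_per_use_yearly(EMPLOYEES, API_USD_PER_USER_DAY)
free_inference = pay_per_use_yearly(EMPLOYEES, 0.0)
break_even = break_even_usd_per_user_day(EMPLOYEES, SEAT_PRICE_USD)

print(f"ChatGPT Enterprise:           {chatgpt:,.0f} USD")
print(f"Open WebUI + OpenAI Platform: {openai_platform:,.0f} USD")
print(f"Open WebUI + free inference:  {free_inference:,.0f} USD")
print(f"Savings (option 1 - 2):       {chatgpt - openai_platform:,.0f} USD")
print(f"Break-even API spend:         {break_even:.2f} USD per user and day")
```

With these inputs the break-even lands at roughly 1.43 USD per user and working day, so the average employee would have to use about seven times more than estimated before the per-seat plan becomes the cheaper one.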

☸️ 4a. Hosting Setup with K8S + Helm

At Platform24 we use K8S and Helm 🏴☠️☸️, so TLDR I just used the Open WebUI helm chart: https://github.com/open-webui/helm-charts.

You'd create your own copy of the file https://github.com/open-webui/helm-charts/blob/main/charts/open-webui/values.yaml and modify it to your liking. Then to deploy:

```shell
# Add the Open WebUI helm repo
helm repo add open-webui https://helm.openwebui.com/

# Fetch the latest version of the Helm chart
helm repo update

# Optionally check which new versions became available
helm search repo open-webui --versions

# Upgrade the release with the latest version of the chart
helm upgrade --install open-webui open-webui/open-webui -f values.yaml
```

Environment variables can be set in the values.yaml file and with those you can configure most things: https://docs.openwebui.com/getting-started/advanced-topics/env-configuration/.

💡 Everything but authentication can also be fully configured from inside the UI! So focus on getting the authentication right first. Then you can configure the rest from the UI. The benefit of having it as env vars in values.yaml is that you can more easily nuke and recreate.

🌞 4b. Hosting Setup with Kamal + Hetzner

Don't like K8S? I too feel it's complex and overkill at times 🤡. For consistency and maintainability, you probably want to host on the infrastructure setup already there at the company you work for. But, if your hands are free, you can also use Kamal and Hetzner to host it yourself.

The https://kamal-deploy.org/ intro video is pretty great and uses Hetzner as an example.

I'll now show you how to do the following:

- Using Kamal on macOS

- Deploy Cheapest Hetzner VM

- GitHub Repo Structure - The Basics

- GitHub Repo Structure - Advanced

🌞 4b1. Using Kamal on macOS

As proposed on https://kamal-deploy.org/docs/installation I suggest using gem install kamal on macOS.

To do that you need ruby first. I recommend https://github.com/rbenv/rbenv. It goes something like this:

```shell
brew install rbenv
rbenv init
rbenv install -l
rbenv install 3.1.2
rbenv global 3.1.2
gem install kamal
```

Kamal also needs a working SSH agent with your key loaded in the terminal session. Try ssh-add -l. Does it say you have a key? If not, you may need to do some setup.

I personally added this to my .zshrc, but I also have pinentry-program /opt/homebrew/bin/pinentry-mac in my gpg-agent.conf etc., so your mileage may vary:

```shell
# Start the SSH agent silently, but print a warning on failure
eval "$(ssh-agent -s)" > /dev/null 2>&1 || echo "Warning: Failed to start SSH agent" >&2

# Add default SSH key silently, but print a warning on failure
ssh-add --apple-use-keychain > /dev/null 2>&1 || echo "Warning: Failed to add SSH key" >&2
```

🌞 4b2. Deploy Cheapest Hetzner VM

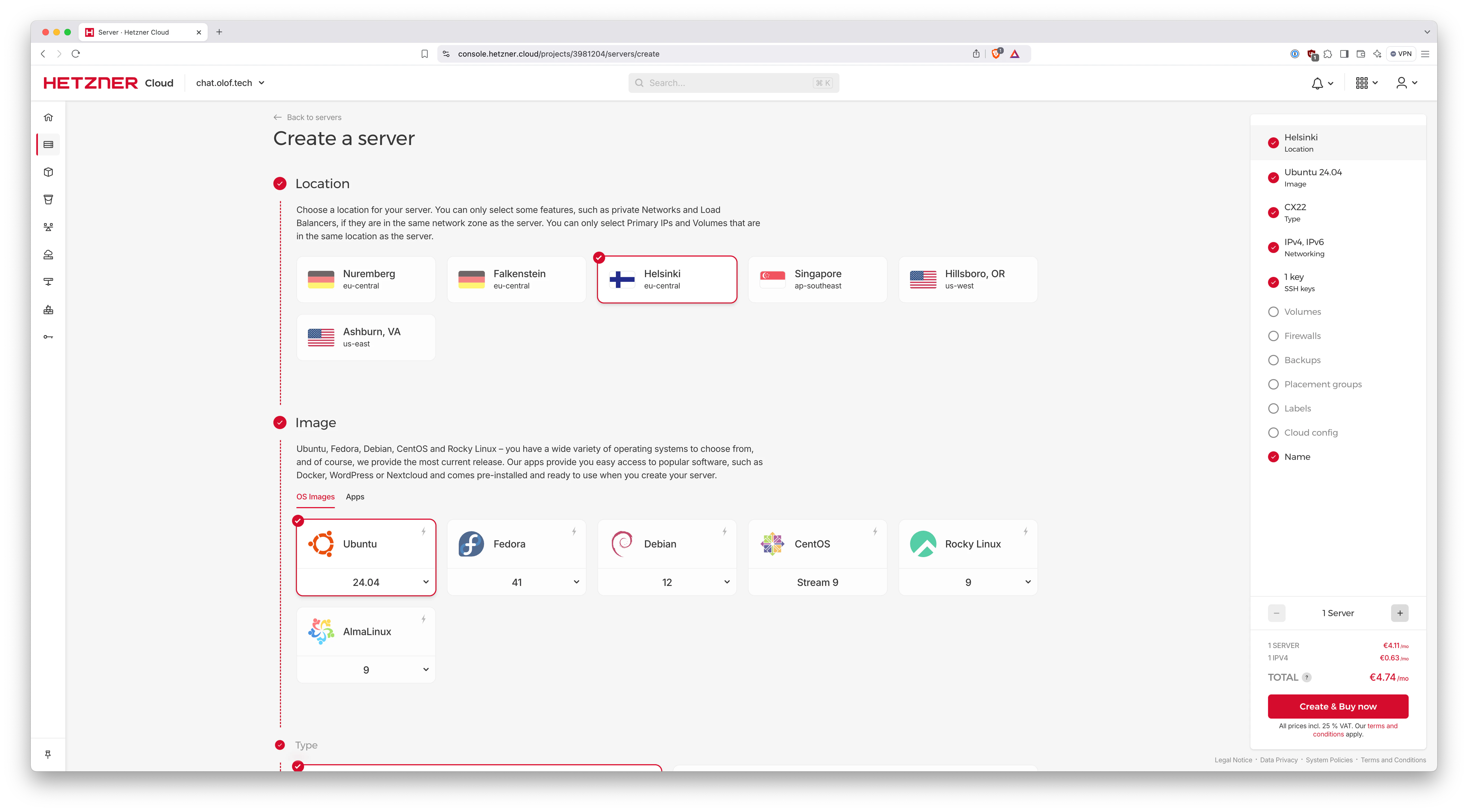

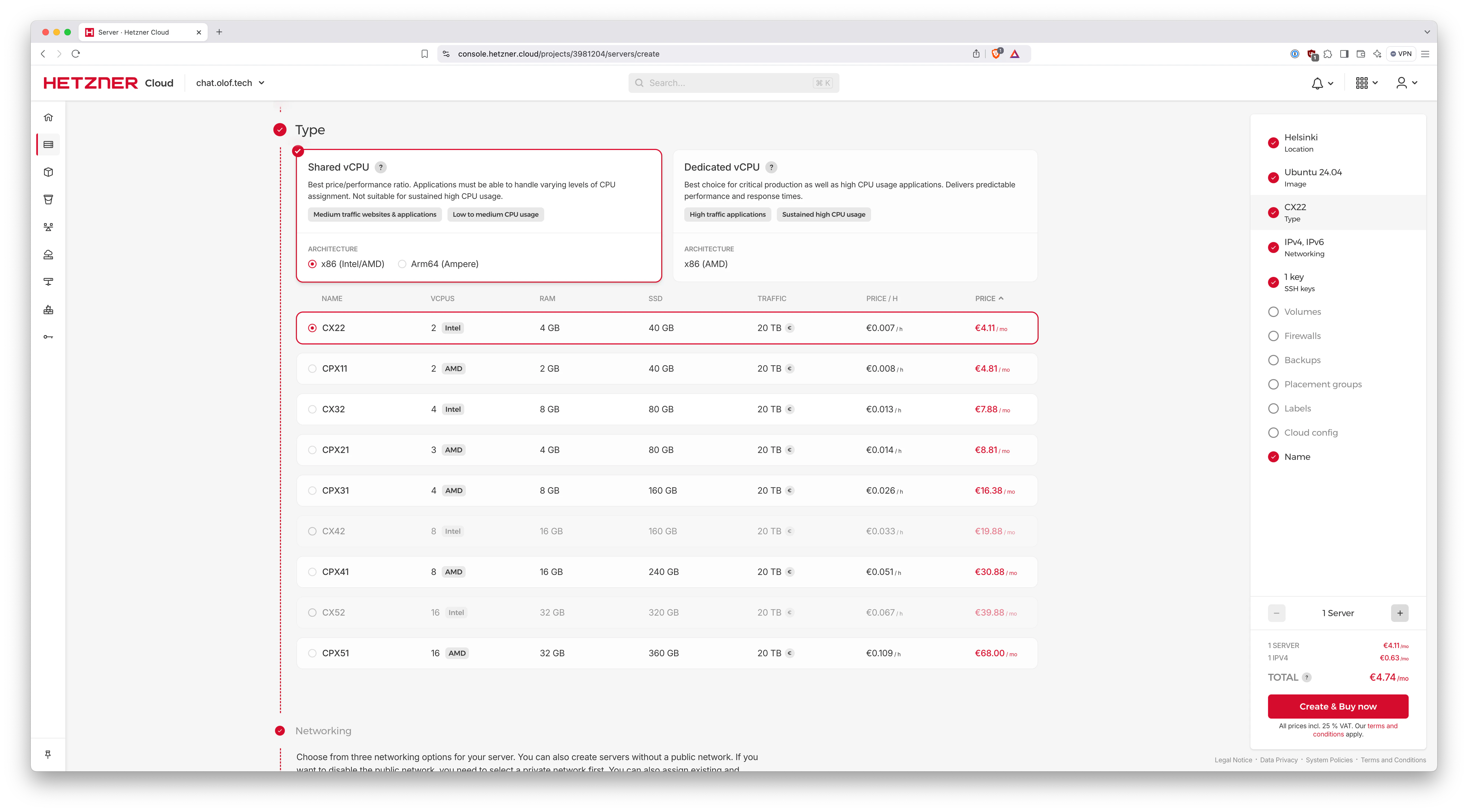

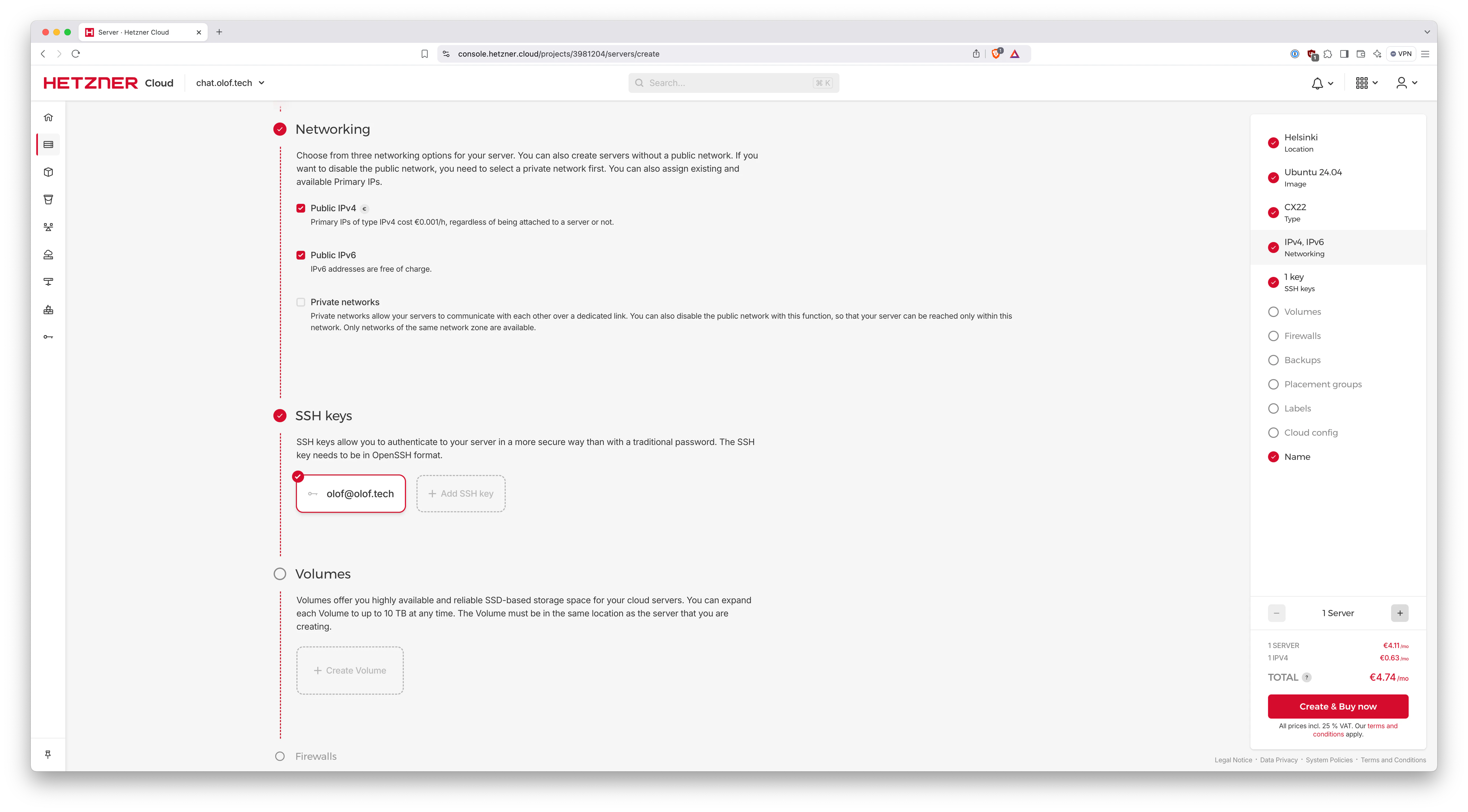

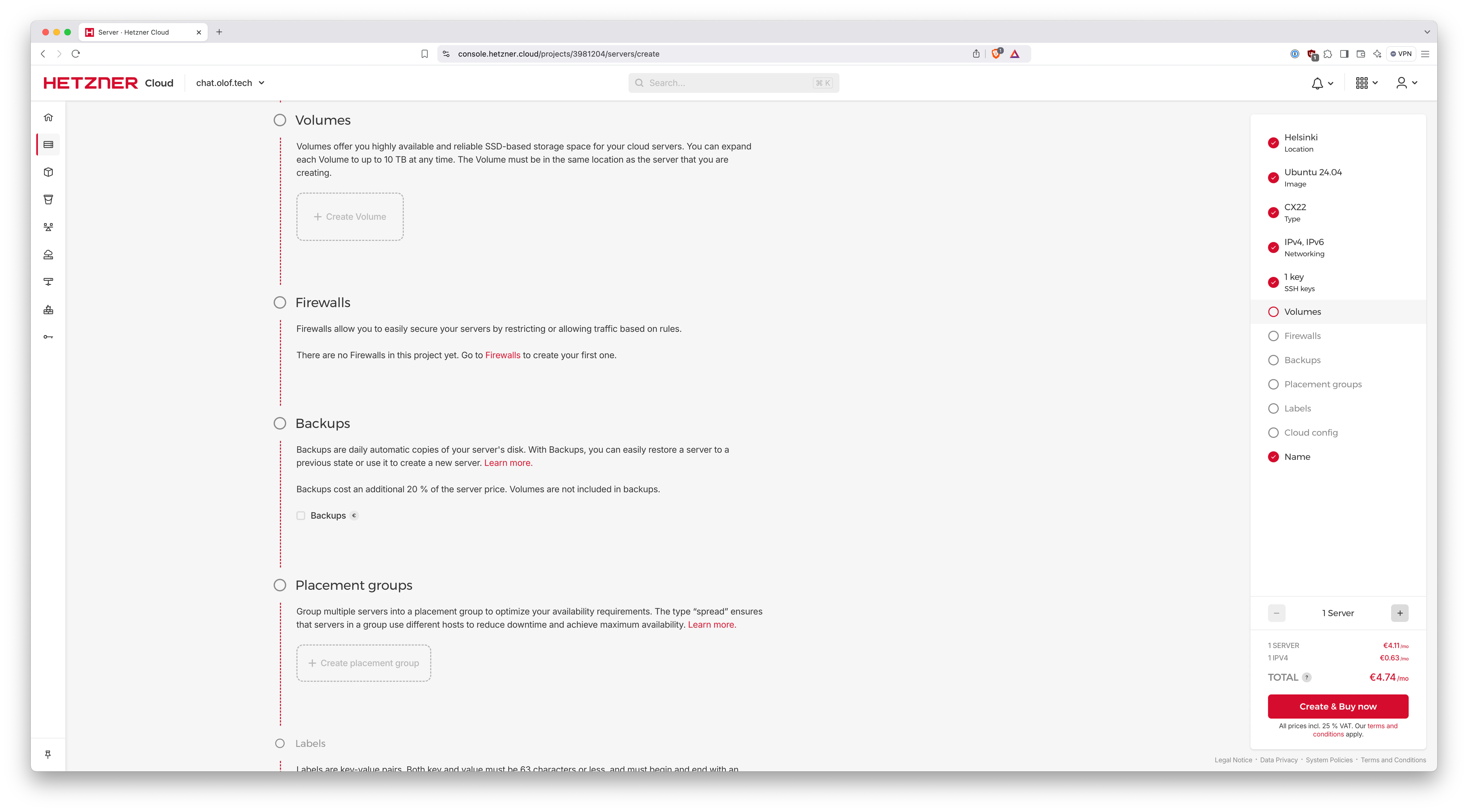

Anyhow, let's deploy the cheapest possible Hetzner VM! The choices goes like this:

| Step | Name | Value | Comment |

|---|---|---|---|

| 1 | Location | Whatever closest to you | As a Swede I chose Helsinki |

| 2 | Image | Ubuntu | That is preselected and default |

| 3 | Type | Shared vCPU x86 (Intel/AMD) CX22 | Just pick the cheapest |

| 4 | Networking | IPv4 and IPv6 | IPv4 made setup much easier |

| 5 | SSH keys | Yours | The one for you personally you have already for git etc |

| 6 | Volumes | - | not needed |

| 7 | Firewalls | - | not needed |

| 8 | Backups | - | not needed |

| 9 | Placement groups | - | not needed |

| 10 | Labels | - | not needed |

| 11 | Cloud config | - | not needed |

| 12 | Name | aichat | If your planned domain name is aichat.mydomain.com |

📷 What that'd look like:

🌞 4b3. GitHub Repo Structure - The Basics

Create a new private GitHub repo called aichat for your upcoming site aichat.mydomain.com.

Run this command inside to get started:

```shell
kamal init
```

Here's my proposal for a basic setup:

Dockerfile

```dockerfile
FROM ghcr.io/open-webui/open-webui:main
```

That means you'll deploy the latest Open WebUI whenever you do kamal deploy.

.dockerignore

```
*
```

The purpose of this .dockerignore file is to ignore all surrounding files when we build the Dockerfile.

config/deploy.yml

```yaml
service: aichat
image: yourgithubusername/aichat

servers:
  web:
    - 123.123.123.123

proxy:
  ssl: true
  host: aichat.mydomain.com
  app_port: 8080
  healthcheck:
    path: /
    interval: 1
    timeout: 60

registry:
  server: ghcr.io
  username: yourgithubusername
  password:
    - KAMAL_REGISTRY_PASSWORD

builder:
  arch: amd64

# https://docs.openwebui.com/getting-started/advanced-topics/env-configuration/
env:
  clear:
    WEBUI_URL: "https://aichat.mydomain.com"
    WEBUI_SESSION_COOKIE_SAME_SITE: "strict"
    WEBUI_SESSION_COOKIE_SECURE: true
    ENABLE_OLLAMA_API: false
    ENABLE_OPENAI_API: true
  secret:
    - OPENAI_API_KEY

# Uncomment this to actually persist your data:
#volumes:
#  - "open-webui:/app/backend/data"
```

The 123.123.123.123 is the Hetzner VM IP.

As you can see, we are using ghcr.io, the GitHub Container Registry, here with a private repo on the GitHub Pro plan. Other container registries also work.

The proxy needed some tweaking: the app port is 8080 rather than the Kamal default 80, and the healthcheck is instructed to check / instead of the Kamal default /up.

.kamal/secrets

```shell
KAMAL_REGISTRY_PASSWORD=$KAMAL_REGISTRY_PASSWORD
OPENAI_API_KEY=$KAMAL_OPENAI_API_KEY
```

And in your .zshrc or similar you define:

```shell
export KAMAL_REGISTRY_PASSWORD="..."
export KAMAL_OPENAI_API_KEY="..."
```

With that done you can:

```shell
# First time only
kamal setup

# Every following time
kamal deploy
```
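An easy mistake with this setup is forgetting one of the exports, so a secret ends up empty. Here is a small preflight sketch in Python that lists which shell variables referenced in a secrets file are not set. The function name `missing_secret_vars` is made up, and it assumes the file only uses the plain NAME=$VAR form shown above (it ignores anything fancier, such as command substitution).

```python
import os
import re

# Matches $NAME or ${NAME} references on the right-hand side of a secrets line.
SECRET_REF = re.compile(r"\$\{?([A-Za-z_][A-Za-z0-9_]*)\}?")


def missing_secret_vars(secrets_text: str, environ=None) -> list[str]:
    """Return referenced variable names that are unset or empty (hypothetical helper)."""
    environ = os.environ if environ is None else environ
    missing: list[str] = []
    for line in secrets_text.splitlines():
        line = line.strip()
        # Skip blanks, comments, and anything that is not NAME=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        _, value = line.split("=", 1)
        for name in SECRET_REF.findall(value):
            if not environ.get(name) and name not in missing:
                missing.append(name)
    return missing


# Demo with the basic secrets file from above and a fake environment
# where only the registry password has been exported.
example_secrets = """\
KAMAL_REGISTRY_PASSWORD=$KAMAL_REGISTRY_PASSWORD
OPENAI_API_KEY=$KAMAL_OPENAI_API_KEY
"""
fake_env = {"KAMAL_REGISTRY_PASSWORD": "placeholder"}

print(missing_secret_vars(example_secrets, fake_env))  # ['KAMAL_OPENAI_API_KEY']
```

To check your real setup you could read the file with `pathlib.Path(".kamal/secrets").read_text()` and call the function without the second argument, so it looks at your actual shell environment.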

🌞 4b4. GitHub Repo Structure - Advanced

Here I'll show you a slightly more advanced setup.

We'll leverage a combination of https://openrouter.ai and https://platform.openai.com.

On top of that we'll configure a whole bunch of stuff via environment variables that you could just as well configure inside the UI admin panel:

- Audio: STT and TTS

- RAG: Using OpenAI embeddings

- Web Search: Using the Brave Search API (https://brave.com/search/api/)

config/deploy.yml

```yaml
# ... same as before ...

# https://docs.openwebui.com/getting-started/advanced-topics/env-configuration/
env:
  clear:
    SCARF_NO_ANALYTICS: true
    DO_NOT_TRACK: true
    ANONYMIZED_TELEMETRY: false
    WEBUI_AUTH: true
    ENABLE_SIGNUP: true
    DEFAULT_USER_ROLE: "pending"
    WEBUI_URL: "https://aichat.mydomain.com"
    WEBUI_SESSION_COOKIE_SAME_SITE: "strict"
    WEBUI_SESSION_COOKIE_SECURE: true
    ENABLE_AUTOCOMPLETE_GENERATION: false
    ENABLE_EVALUATION_ARENA_MODELS: false
    ENABLE_OLLAMA_API: false
    ENABLE_OPENAI_API: true
    OPENAI_API_BASE_URL: "https://openrouter.ai/api/v1"
    TASK_MODEL_EXTERNAL: "openai/gpt-4o-mini"
    ENABLE_COMMUNITY_SHARING: false
    ENABLE_MESSAGE_RATING: false
    ENABLE_RAG_WEB_SEARCH: true
    ENABLE_SEARCH_QUERY: true
    RAG_WEB_SEARCH_ENGINE: "brave"
    RAG_TOP_K: 10
    RAG_EMBEDDING_ENGINE: "openai"
    RAG_OPENAI_API_BASE_URL: "https://api.openai.com/v1"
    RAG_EMBEDDING_MODEL: "text-embedding-3-large"
    RAG_EMBEDDING_OPENAI_BATCH_SIZE: 500
    AUDIO_STT_ENGINE: "openai"
    AUDIO_STT_OPENAI_API_BASE_URL: "https://api.openai.com/v1"
    AUDIO_STT_MODEL: "whisper-1"
    AUDIO_TTS_ENGINE: "openai"
    AUDIO_TTS_OPENAI_API_BASE_URL: "https://api.openai.com/v1"
    AUDIO_TTS_MODEL: "tts-1"
    AUDIO_TTS_VOICE: "onyx"
    ENABLE_IMAGE_GENERATION: false
  secret:
    - OPENAI_API_KEY
    - BRAVE_SEARCH_API_KEY
    - RAG_OPENAI_API_KEY
    - AUDIO_STT_OPENAI_API_KEY
    - AUDIO_TTS_API_KEY
    - AUDIO_TTS_OPENAI_API_KEY

# ... same as before ...
```

.kamal/secrets

```shell
KAMAL_REGISTRY_PASSWORD=$KAMAL_REGISTRY_PASSWORD
OPENAI_API_KEY=$KAMAL_OPENROUTER_API_KEY
BRAVE_SEARCH_API_KEY=$KAMAL_BRAVE_SEARCH_API_KEY
RAG_OPENAI_API_KEY=$KAMAL_OPENAI_API_KEY
AUDIO_STT_OPENAI_API_KEY=$KAMAL_OPENAI_API_KEY
AUDIO_TTS_API_KEY=$KAMAL_OPENAI_API_KEY
AUDIO_TTS_OPENAI_API_KEY=$KAMAL_OPENAI_API_KEY
```

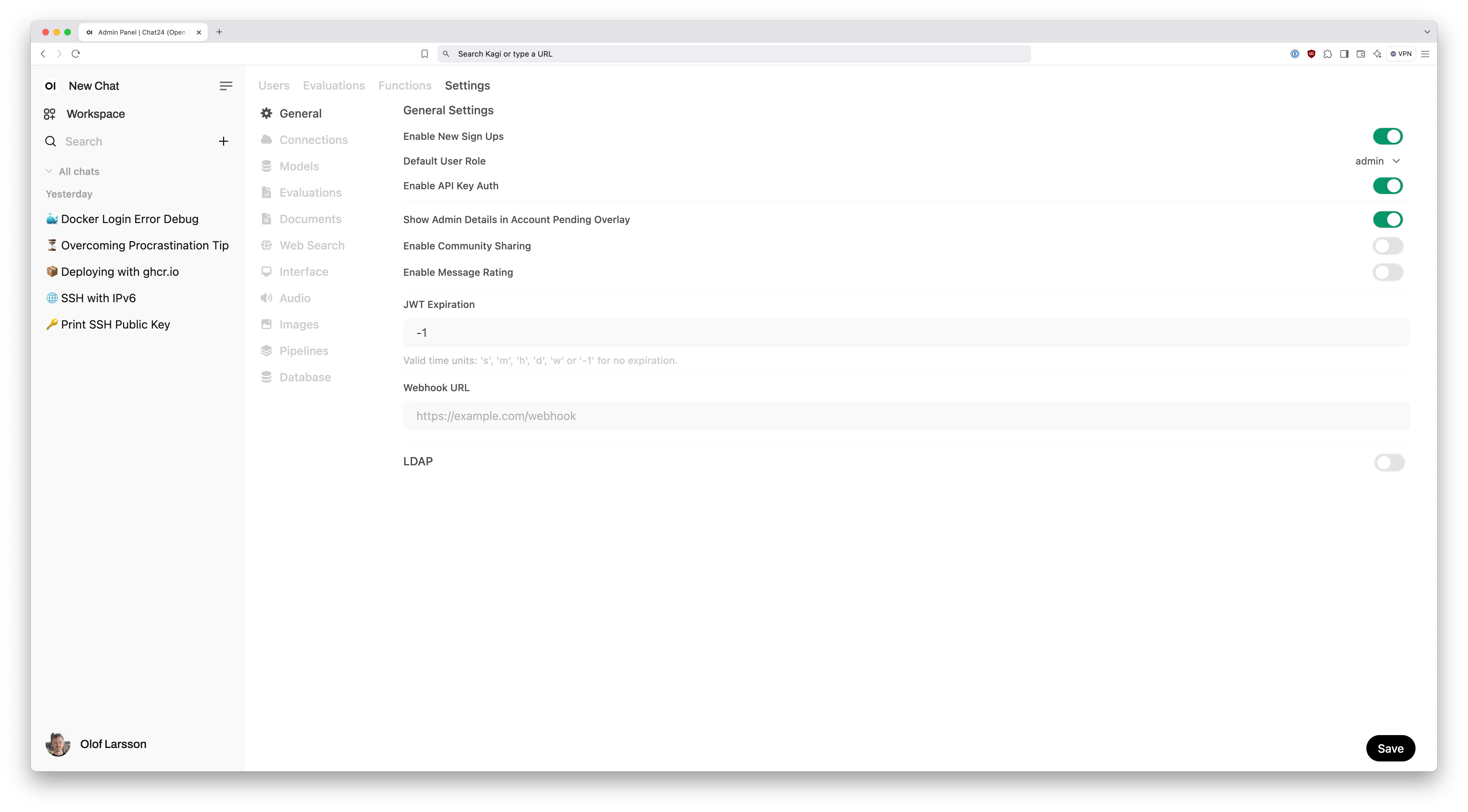
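With two providers in the mix it is easy to lose track of which feature talks to which company, which matters for the InfoSec point made earlier. Here is a small Python sketch that lists the host behind every base URL setting and flags hosts you have not approved. The function names and the idea of an "allowed" set are made up for illustration; the settings are copied from the config above. Note that it only looks at base URL settings, so web search queries going to Brave are not covered.

```python
from urllib.parse import urlparse

# Base URL settings copied from the env block above.
ENV = {
    "OPENAI_API_BASE_URL": "https://openrouter.ai/api/v1",
    "RAG_OPENAI_API_BASE_URL": "https://api.openai.com/v1",
    "AUDIO_STT_OPENAI_API_BASE_URL": "https://api.openai.com/v1",
    "AUDIO_TTS_OPENAI_API_BASE_URL": "https://api.openai.com/v1",
}


def hosts_by_setting(env: dict[str, str]) -> dict[str, str]:
    """Map every *_BASE_URL setting to the host that receives its traffic."""
    return {
        name: urlparse(url).hostname or ""
        for name, url in env.items()
        if name.endswith("_BASE_URL")
    }


def unexpected_hosts(env: dict[str, str], allowed: set[str]) -> dict[str, str]:
    """Return the settings whose host is not in the approved set."""
    return {
        name: host
        for name, host in hosts_by_setting(env).items()
        if host not in allowed
    }


for name, host in hosts_by_setting(ENV).items():
    print(f"{name:32} -> {host}")

# Example: if only OpenAI had been approved, the chat endpoint gets flagged.
print(unexpected_hosts(ENV, allowed={"api.openai.com"}))
```

In this config, chat completions go to OpenRouter (and from there to whichever provider serves the chosen model), while embeddings, STT, and TTS go directly to OpenAI.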

⚙️ Tweaking Configuration

⚙️ You can change most settings inside the UI. So you don't actually have to create the perfect env-var config.

🔒 The most challenging part is auth and registration.

The simplest solution is to set Default User Role: pending and then allow normal user registration.

More convenient, but way harder, is setting up OAuth-only sign-in and registration. If you can limit it to your company email domain, you may want to set Default User Role: admin so that everyone has access to all the goodies.

😅 Setting up OAuth is hard enough to warrant a blog post on its own. So that's for another time.

❤️ Spread the Word

👏 Okay, great job! You have set up some sort of ChatGPT Enterprise clone for your organization!

💬 Now go around and invite people. Likely they will be happy and say:

"Wow, so I can cancel my private ChatGPT subscription now? Thanks!"