Cerebras for Open WebUI

I made a Cerebras provider manifold for Open WebUI.

Check it out: https://openwebui.com/f/olof/cerebras

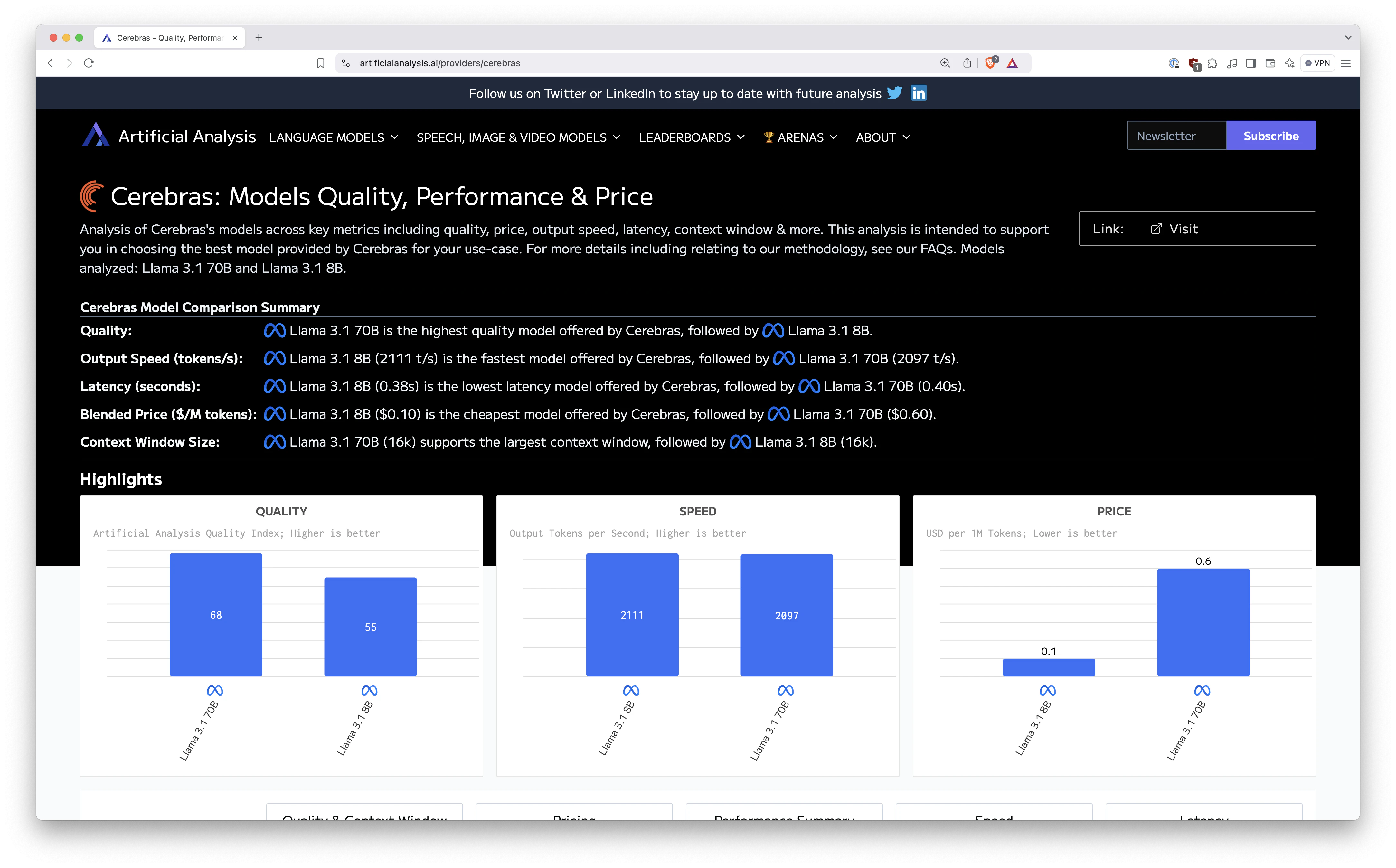

🏎️ Over 2000 tokens/s

Take a look at: https://artificialanalysis.ai/providers/cerebras

Cerebras is currently offering Llama 3.1 8B and 70B inference at over 2000 tokens/s.
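To get a feel for what that rate means in practice, here is a back-of-the-envelope sketch. The function name `generation_seconds` is made up for illustration, and it only models steady-state decoding: network latency and time to first token are ignored.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float = 2000.0) -> float:
    """Rough time to generate num_tokens at a constant decoding rate.

    Assumption: the rate is constant and there is no startup latency.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# A 1000-token answer at 2000 tokens/s takes about half a second.
print(generation_seconds(1000))  # 0.5
```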

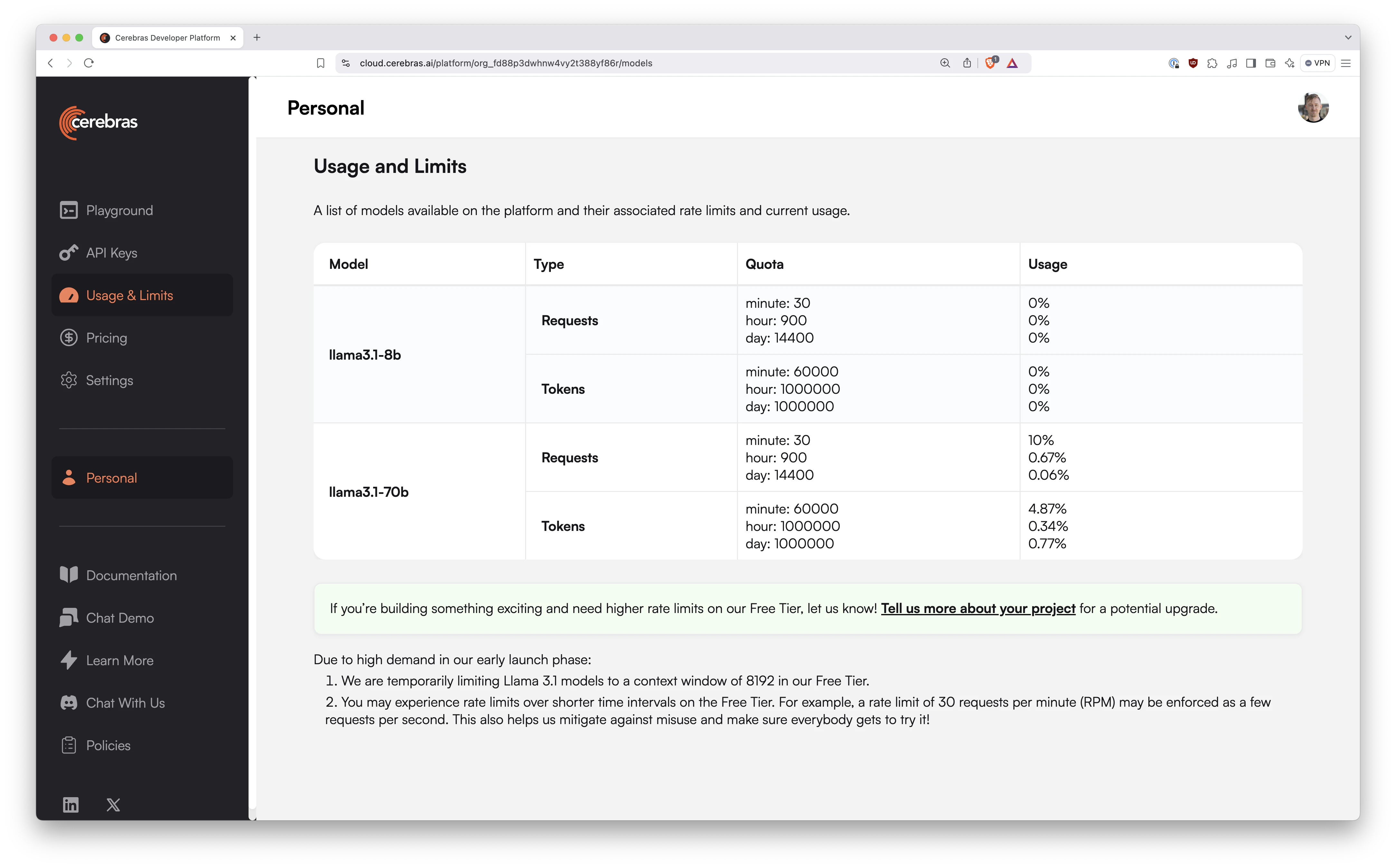

✨ Free Tier

Additionally, you get a decent amount of free inference:

📝 Full Code

"""
title: Cerebras
description: https://cloud.cerebras.ai
author: Olof Larsson
author_url: https://olof.tech/cerebras-for-open-webui
version: 1.2.0
license: MIT
"""

import os
import time
import requests
from typing import List, Union, Generator, Iterator, Optional
from pydantic import BaseModel, Field

# Learn more here:
# https://olof.tech/cerebras-for-open-webui


class Pipe:
    class Valves(BaseModel):
        API_KEY: str = Field(
            default=os.getenv("CEREBRAS_API_KEY", ""),
            description="Get your key here: https://cloud.cerebras.ai",
        )

    def __init__(self):
        self.type = "manifold"
        self.id = "cerebras"
        self.name = "cerebras/"
        self.valves = self.Valves()
        self.base_url = "https://api.cerebras.ai/v1"

    def get_headers(self):
        return {
            "Content-Type": "application/json",
            "Authorization": f"Bearer {self.valves.API_KEY}",
        }

    def get_model_ids_uncached(self):
        headers = self.get_headers()
        r = requests.get(f"{self.base_url}/models", headers=headers)
        r.raise_for_status()
        response = r.json()
        models = response["data"]
        return [model["id"] for model in models if "whisper" not in model["id"]]

    def get_model_ids(self):
        # Check if we have a cached result less than 10 minutes old and API key hasn't changed
        current_time = time.time()
        if (
            hasattr(self, "_cached_models")
            and hasattr(self, "_cache_time")
            and hasattr(self, "_cache_api_key")
            and self._cache_api_key == self.valves.API_KEY
        ):
            if current_time - self._cache_time < 600:  # 10 minutes
                return self._cached_models

        # Cache miss, expired, or API key changed - fetch new data
        result = self.get_model_ids_uncached()

        # Update cache with current API key
        self._cached_models = result
        self._cache_time = current_time
        self._cache_api_key = self.valves.API_KEY
        return result

    def pipes(self) -> List[dict]:
        model_ids = self.get_model_ids()
        return [{"id": model_id, "name": model_id} for model_id in model_ids]

    def pipe(
        self, body: dict, __user__: Optional[dict] = None
    ) -> Union[str, Generator, Iterator]:
        body["model"] = body["model"].removeprefix(self.id + ".")
        headers = self.get_headers()
        try:
            r = requests.post(
                url=f"{self.base_url}/chat/completions",
                json=body,
                headers=headers,
                stream=True,
            )
            r.raise_for_status()
            if body["stream"]:
                return r.iter_lines()
            else:
                return r.json()
        except Exception as e:
            return f"Error: {e}"
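The model-list caching in `get_model_ids` is the least obvious part of the plugin, so here is a minimal standalone sketch of the same idea: keep one result for up to 10 minutes, and refetch when it expires or the API key changes. `KeyedTTLCache`, `fake_fetch`, and the injectable `clock` are hypothetical names introduced only for illustration. They are not part of the plugin or of Open WebUI.

```python
import time
from typing import Callable, List, Optional


class KeyedTTLCache:
    """Holds one fetched value, valid for ttl_seconds and for a single key.

    Hypothetical helper mirroring the plugin's logic: a different key
    (for example a changed API key) or an expired entry triggers a refetch.
    """

    def __init__(
        self,
        fetch: Callable[[str], List[str]],
        ttl_seconds: float = 600.0,
        clock: Callable[[], float] = time.time,
    ):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the cache can be tested without sleeping
        self._value: Optional[List[str]] = None
        self._time: Optional[float] = None
        self._key: Optional[str] = None

    def get(self, key: str) -> List[str]:
        now = self._clock()
        fresh = (
            self._value is not None
            and self._time is not None
            and self._key == key
            and now - self._time < self._ttl
        )
        if fresh:
            return self._value
        # Cache miss, expired, or key changed: fetch and remember the result.
        value = self._fetch(key)
        self._value, self._time, self._key = value, now, key
        return value


def fake_fetch(api_key: str) -> List[str]:
    # Stand-in for the real HTTP request; the returned ID is a placeholder.
    print(f"fetching models for key {api_key!r}")
    return ["example-model"]


cache = KeyedTTLCache(fake_fetch)
cache.get("key-1")  # fetches
cache.get("key-1")  # served from the cache
cache.get("key-2")  # key changed, fetches again
```

Passing the clock in as a parameter is a design choice for testability. The plugin itself calls `time.time()` directly and stores the cache fields on the `Pipe` instance.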

🔗 Related Posts

I recently made Open WebUI plugins for all three of the big inference hardware companies: